This page describes personal research projects. For research work conducted by students in my lab, please visit the Realtime Expressive Programming Lab (REPL).

-

Screamer

Code in Screamer creating a scene of infinitely repeating spheres textured with dots. Screamer is a programming language and live coding environment for experimenting with ray marching in audiovisual performances. While the language is idiosyncratic, it is designed to be as terse as possible while still enabling performers to experiment with constructive solid geometry and various audio-reactive effects.
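To illustrate the kind of scene described above, here is a minimal sketch of ray marching (sphere tracing) through an infinite grid of spheres. It is written in Python rather than Screamer, and every name in it (`sphere_sdf`, `repeat`, `scene`, `march`) is hypothetical; it shows the general technique and is not Screamer's syntax or implementation.

```python
import math

def sphere_sdf(p, radius=1.0):
    """Signed distance from point p to a sphere centered at the origin."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def repeat(p, period):
    """Fold space into a repeating cell of the given size, centered on the origin."""
    half = period / 2.0
    return tuple(((c + half) % period) - half for c in p)

def scene(p):
    """An infinite grid of spheres: a single sphere evaluated in repeated space."""
    return sphere_sdf(repeat(p, period=4.0), radius=1.0)

def march(origin, direction, max_steps=64, epsilon=1e-3, max_dist=100.0):
    """Step along the ray by the distance to the nearest surface until a hit."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + d * t for o, d in zip(origin, direction))
        dist = scene(p)
        if dist < epsilon:
            return t          # distance travelled to the surface
        t += dist
        if t > max_dist:
            break
    return None               # the ray did not hit anything

hit = march((0.0, 0.0, -2.0), (0.0, 0.0, 1.0))   # aimed at a sphere center
miss = march((2.0, 2.0, -2.0), (0.0, 0.0, 1.0))  # travels along a cell edge
```

Because the repetition happens in the distance function, the scene costs the same to evaluate as a single sphere, which is part of why the technique suits terse live coding.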

publications:

videos:

-

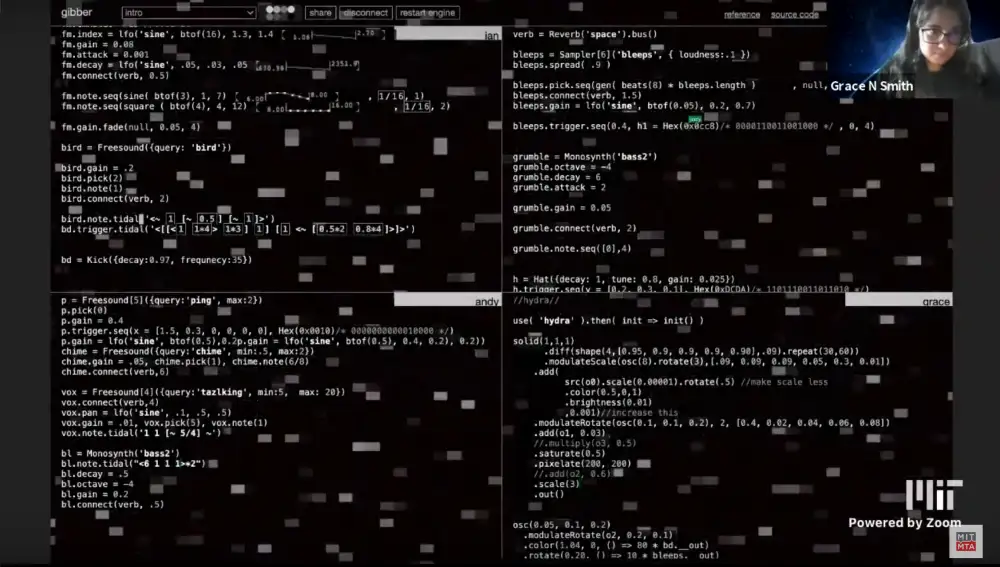

Gibber

Four members of the MIT laptop ensemble giving a networked audiovisual performance in Gibber. Gibber is an audiovisual creative coding environment that runs in desktop browsers. It provides easy methods for people to sequence music, create 2D and 3D visual compositions, and link audio and visual elements together for interactive, multimodal performances. Gibber has been used to teach computational media to middle school, high school and college students, with use in classes at over thirty universities.

publications:

videos:

-

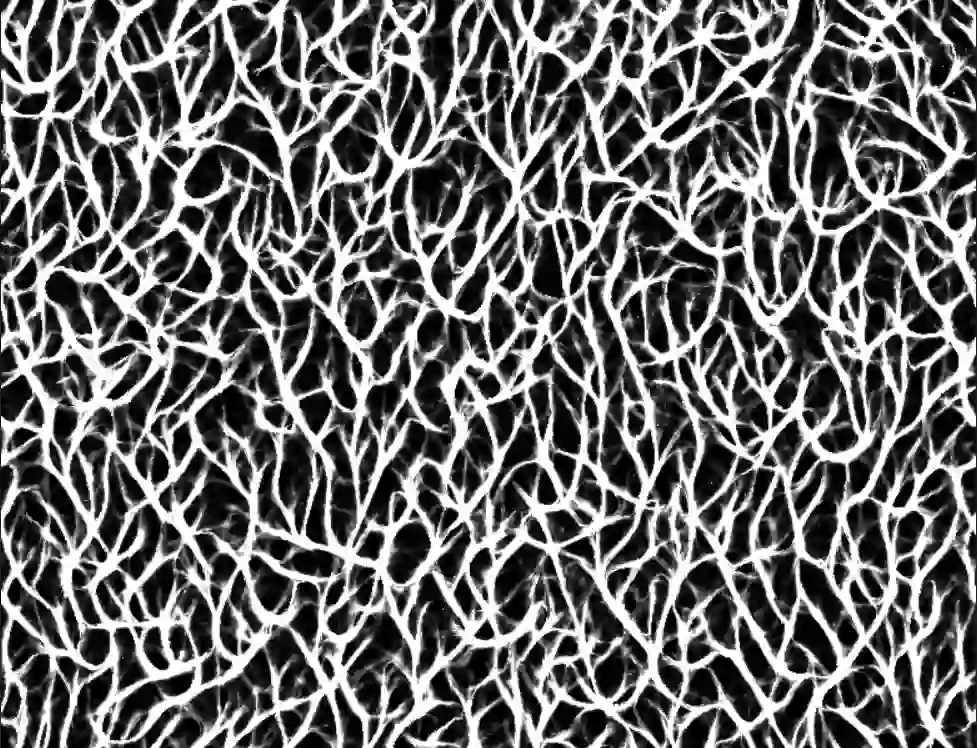

seagulls

Slime mold simulation rendered using seagulls. [launch (Chrome/Edge only)] seagulls.js is a new unified framework for running and representing simulations on the GPU. It lets users get started with WebGPU without learning or writing large amounts of boilerplate code, and provides a large number of demos as scaffolding so that users can build up to increasingly complex simulations.

-

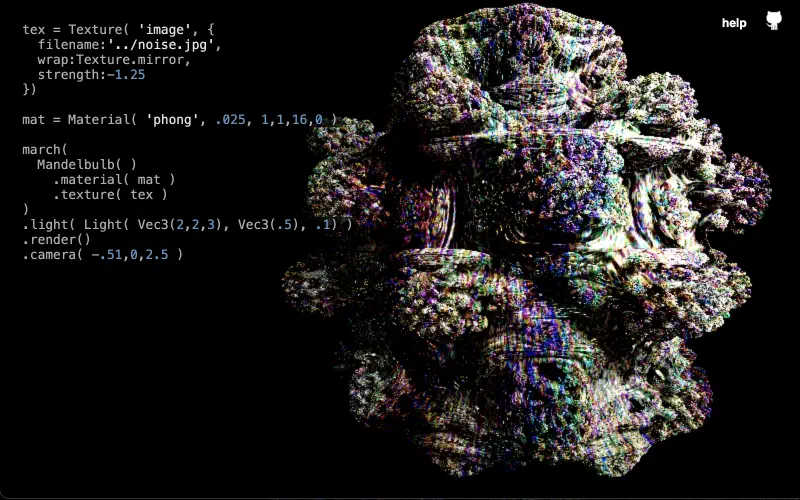

marching.js

Textured fractal rendered using marching.js. [launch] marching.js is a JavaScript library that compiles GLSL raymarchers inspired by the demoscene. The goal of the project is to enable programmers who might not be proficient in GLSL to easily experiment with raymarching techniques, constructive solid geometry, and live-coding performance.
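As a rough illustration of constructive solid geometry in a raymarcher, shapes can be described by signed distance functions and combined with `min` and `max`. The sketch below is in Python, not GLSL or the marching.js API, and all of its function names are hypothetical.

```python
import math

def sphere(p, radius):
    """Signed distance to a sphere centered at the origin."""
    return math.sqrt(sum(c * c for c in p)) - radius

def box(p, half_extents):
    """Signed distance to an axis-aligned box centered at the origin."""
    q = [abs(c) - h for c, h in zip(p, half_extents)]
    outside = math.sqrt(sum(max(c, 0.0) ** 2 for c in q))
    inside = min(max(q), 0.0)
    return outside + inside

# CSG operators act on distances, so they compose freely.
def union(a, b):
    return min(a, b)

def intersection(a, b):
    return max(a, b)

def difference(a, b):
    return max(a, -b)

def carved_box(p):
    """A unit cube with a spherical cavity removed from its center."""
    return difference(box(p, (1.0, 1.0, 1.0)), sphere(p, 1.2))
```

A negative result means the point is inside the solid, so `carved_box((0, 0, 0))` is positive (the center has been carved away) while a point near a corner of the cube is still negative.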

publications:

videos:

-

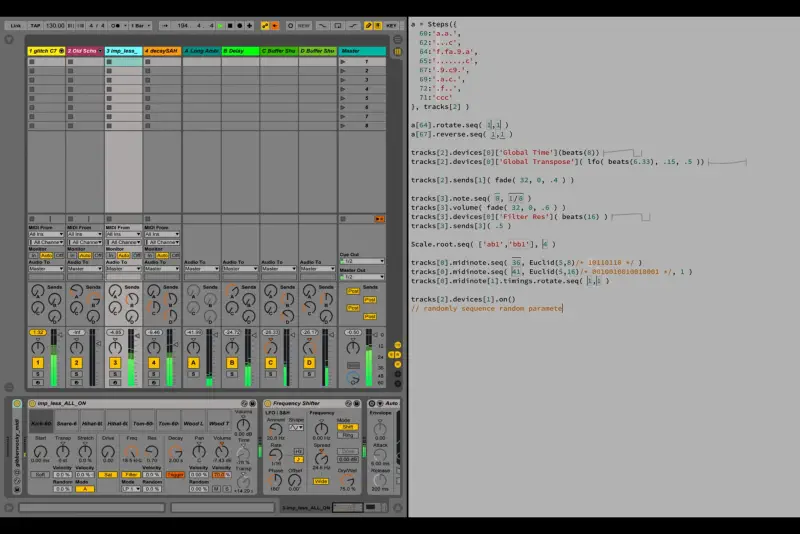

gibberwocky

Live coding Ableton Live with gibberwocky. [watch video] gibberwocky is a live-coding environment that runs in the browser (Chrome for best results) but targets external applications, such as digital audio workstations. It provides deep integration with Ableton Live and/or Max/MSP, enabling users to easily define complex modulation graphs and algorithmic pattern manipulations. gibberwocky was designed and developed in collaboration with Graham Wakefield.

publications:

videos:

-

genish.js

genish.js is a low-level JavaScript library for digital signal processing, with an emphasis on audio. It is a JavaScript port of the gen~ framework for Max/MSP, with various additional features. It provides building blocks that can be combined into audio graphs to easily implement optimized audio synthesis algorithms; these graphs are then compiled into efficient JavaScript functions.
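The idea of compiling a graph of building blocks into a single function can be sketched as follows. This is a Python analogy under my own assumptions, not the genish.js API: the `Node` class and the `phase`, `mul`, `sine` and `compile_graph` helpers are all hypothetical, and the real library emits JavaScript rather than Python.

```python
import math

class Node:
    """A building block that can emit a source expression for itself."""
    def __init__(self, template, *inputs):
        self.template = template
        self.inputs = inputs

    def emit(self):
        # Nested nodes emit their own source; plain numbers become literals.
        args = [i.emit() if isinstance(i, Node) else repr(float(i))
                for i in self.inputs]
        return self.template.format(*args)

def phase():
    return Node("phase")                 # input supplied once per sample

def mul(a, b):
    return Node("({} * {})", a, b)

def sine(a):
    return Node("math.sin({})", a)

def compile_graph(root):
    """Flatten the whole graph into one function with no per-node overhead."""
    source = "def render(phase):\n    return " + root.emit() + "\n"
    namespace = {"math": math}
    exec(source, namespace)
    return namespace["render"], source

# A sine oscillator at half amplitude, driven by a 0..1 phase ramp.
graph = mul(sine(mul(phase(), 2 * math.pi)), 0.5)
render, source = compile_graph(graph)
```

Calling `render` evaluates one flat expression per sample instead of walking the graph, which is the efficiency gain that compilation is meant to provide.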

publications:

-

Gibberish.js

Gibberish.js is a JavaScript library for audio synthesis and signal processing. It features sample-accurate sequencing, single-sample feedback and an efficient, automatically generated audio graph. The library provides the audio capabilities for Gibber. The 2013 NIME publication (which also discussed Interface.js) received a Best Paper Award.
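Single-sample feedback means that a unit's output can be fed back into its input with a delay of only one sample, which many filters and physical models require. A minimal sketch of the idea, written in Python with a hypothetical function name and not taken from the Gibberish.js API, is a one-pole lowpass filter:

```python
def one_pole_lowpass(samples, coefficient=0.1):
    """Smooth a signal with y[n] = y[n-1] + coefficient * (x[n] - y[n-1])."""
    previous_output = 0.0        # the single sample of feedback state
    output = []
    for x in samples:
        previous_output += coefficient * (x - previous_output)
        output.append(previous_output)
    return output

# A step input rises gradually toward 1.0 instead of jumping.
smoothed = one_pole_lowpass([1.0, 1.0, 1.0, 1.0], coefficient=0.5)
```

Processing audio in blocks makes this kind of feedback awkward, because the previous output is not available until the whole block has been computed; working one sample at a time avoids the problem.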

publications:

-

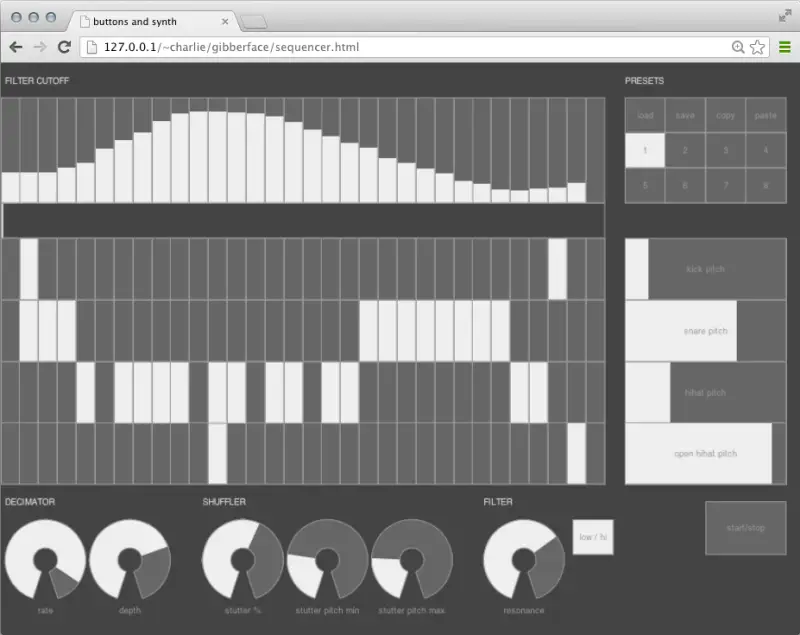

Interface.js

Drum sequencer interface made with Interface.js. Interface.js is a JavaScript library enabling users to create 2D performance interfaces that run both on mobile devices and in desktop web browsers. The project also includes a server application that translates the messages generated by these interfaces into OSC or MIDI messages so that they can be used in conjunction with various audiovisual packages. The 2013 NIME publication (which also discussed Gibberish.js) received a Best Paper Award.

publications:

-

Control

Various interfaces running in Control. Control enables users to design their own touchscreen interfaces for controlling music, art, and virtual reality software. For many years, it was the only mobile interface software enabling end-user scripting, transmitting both wireless MIDI and OSC, and running on both iOS and Android devices; to the best of my knowledge it is also the only free and open-source platform to ever have these features. It was selected by Apple as a New and Noteworthy app for the week of 1/23/2011 and downloaded over 100,000 times.

publications:

press:

Archived projects:

-

Zoomorph

Comparing how a rat and a deer see a flower. Zoomorph simulates animal perception of color by processing live video input on the GPU of mobile devices. The vision of over forty animals can be simulated; each animal has a set of RGB coefficients drawn from scientific articles. Photos can be taken using the processed video feed and subsequently saved to the device or posted to Facebook or Flickr. Zoomorph was developed with artists Lisa Jevbratt and Javier Villegas; I was responsible for iOS development.
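One plausible way to apply a set of RGB coefficients to each pixel is as a 3x3 mixing matrix. The sketch below assumes that form, runs on the CPU in Python rather than on the GPU, and uses placeholder values that are not measured animal data; the function and constant names are hypothetical.

```python
def apply_coefficients(pixel, matrix):
    """Mix an (r, g, b) pixel with channels in 0..1 through a 3x3 matrix."""
    mixed = (sum(w * c for w, c in zip(row, pixel)) for row in matrix)
    # Clamp so that the result stays a displayable color.
    return tuple(min(max(v, 0.0), 1.0) for v in mixed)

# Placeholder coefficients for illustration only: red and green are
# averaged together, so the two become indistinguishable.
HYPOTHETICAL_DICHROMAT = (
    (0.5, 0.5, 0.0),
    (0.5, 0.5, 0.0),
    (0.0, 0.0, 1.0),
)

pure_red = apply_coefficients((1.0, 0.0, 0.0), HYPOTHETICAL_DICHROMAT)
pure_green = apply_coefficients((0.0, 1.0, 0.0), HYPOTHETICAL_DICHROMAT)
```

With these placeholder values `pure_red` and `pure_green` come out identical, which is the sort of perceptual difference such a simulation is meant to convey.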

-

Composition for Conductor and Audience

This audience participation piece let 20+ users use their personal mobile devices to control structured scenes of music. If users did not obey the wishes of the conductor, the conductor had the power to 'cut' them from the piece temporarily. The piece was an investigation to see if audience members would follow the conductor or simply do whatever they chose in an anonymous performance environment. [launch video]

-

Visual Metronome

Although no longer in active development, Visual Metronome was the first metronome in the App Store to keep accurate time and sold tens of thousands of copies from 2010–2016 before I changed it to be a free app. Many ideas from its UI have been copied by other iOS metronome applications. Learn more at the Visual Metronome website.

-

DeviceServer

The DeviceServer drives interactivity inside the AlloSphere Research Facility, a three-story spherical immersive instrument housed in the California NanoSystems Institute. While studying at UCSB I was responsible for enabling developers to easily utilize a wide variety of mobile, gaming and virtual reality devices. The DeviceServer provides this ability, allowing users to configure interactive controls and perform signal processing on control signals via JIT-compiled Lua scripts. I continue to work on this project as an affiliated researcher with the AlloSphere Research Group.

-

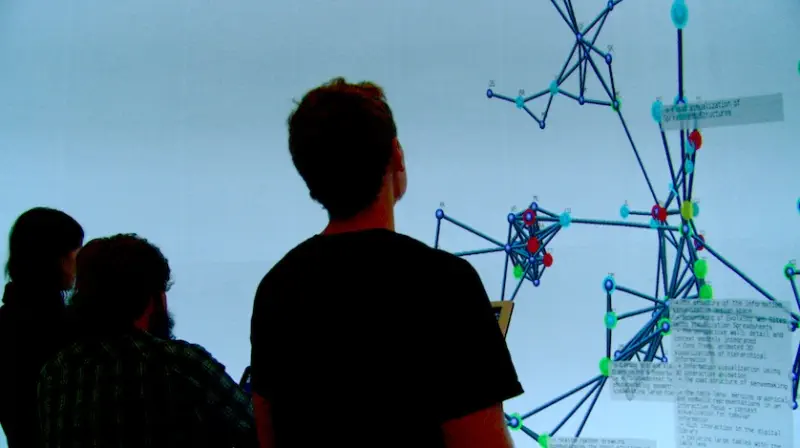

Multiuser Browsing of 3D Graphs

Users browsing a projected network graph on tablets. This research enables groups of users to collaboratively explore social network graphs. Personal tablet displays are used to select nodes in graphs and view associated data, while a shared large display (for our research we used the screen of the AlloSphere) shows the full graph and browsing history of all users. By providing browsing affordances on individual tablets, we avoid occluding the shared display with individual node queries. Tablets also display a graphical approximation of the nodes currently displayed, helping provide an easy transition between the tablet and shared display.

-

Stereo

Stereo is a library for the Processing prototyping environment that allows developers to easily create stereoscopic graphics, animations and virtual reality environments. Since Processing has a strong educational slant, the Stereo library was designed to be easy to use for novice programmers. It supports anaglyph, active and passive stereo projection systems.

Stereo was developed in collaboration with Angus Forbes and is freely available as open-source software.

-

midiStroke

midiStroke allows you to trigger keystrokes in the currently focused application using MIDI (Musical Instrument Digital Interface) note, program and CC messages. Each MIDI message can trigger an unlimited number of keystrokes in sequence. midiStroke is one of the first compiled applications I ever wrote; eight years later it is still downloaded thousands of times each year.

press: Electronic Musician